What is ETL / ELT?

ETL stands for ‘Extract, Transform and Load’ and refers to the collecting and organising of your data to ready it for analysis, which then provides you with valuable insight and foresight into your business, product or organisation.

So - ETL is the process whereby you:

Extract data from multiple data sources.

Transform that data by cleansing and combining the data.

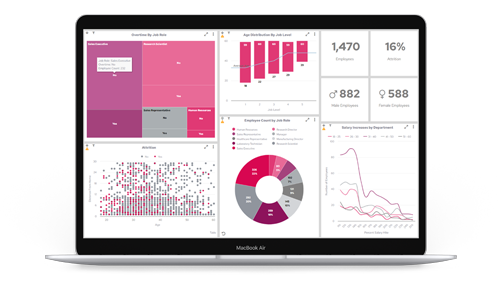

Load - put the data into a convenient location to connect to your favorite BI reporting tools such as Panitelligence's embedded analytics suite.

It is more common these days to consider an ELT process (rather than ETL), where data is extracted, loaded and then transformed. This is because the target datastore is often the most efficient place to perform data transformations.

Why do I need to ETL or ELT?

Data in any business tends to exist in multiple sources. You can spend a lot of time manually wrangling it in tools like Excel. But this is slow, expensive and prone to errors. Your director of sales should be running the sales team not building spreadsheets.

Unfortunately, in recent years this has often become increasingly complicated. Your data exists in a mixture of traditional on-premise locations and SaaS cloud applications. You need to combine and aggregate the data to get the full picture.

When do I need to use ETL / ELT?

If you need real-time or near real-time data - then you are going to need to first set up your ETL or ELT process. It’s no good running today with last month’s data. You need to react quickly to problems before they happen. Your teams need to understand the value of keeping their data up to date and accurate. They need to see that it drives value.

Your business spends a lot of money on applications, collecting data and storing the data. You must maximise the value of the data by allowing the business to become data driven. Stop guessing and back up gut feel with facts.

What are the different options for setting up ETL/ELT processes?

There are a couple of different options for businesses wanting to utilise their data.

Data Scientists – it’s very difficult to pin down exactly what a data scientist is, but one thing most in this field have in common is that they are data wranglers. They pull data from multiple sources, databases, API’s flat files and combine it – usually into a data warehouse and simplify it to allow it to be understood.

Tools – it is becoming more common for business users to want to do more for themselves, with less reliance on data scientists or developers. Tech teams tend to be blockers, they are a scarce resource. You don’t have time to scope and wait for a project to be delivered. You need to pull data transform it and make a decision.

Technology should be an enabler, tools must allow you to consume data securely and accurately when you need to. Data exploration should be carried out by the business users with the domain expertise, this allows better decisions to be made / problems to be spotted faster.

What are the best ETL tools?

To choose the best tools for ETL, you’ll need to ask the following questions:

- Is our data held in an on-premise database?

- Do I need data held in cloud SAAS platforms?

- Do we require real time data?

- Does data from multiple sources require joining and aggregating?

The following ETL tools can help you in your journey to extract value from the data you generate.

Matillion

Matillion recently launched Matillion ETL to sit alongside its traditional data loader applications. Matillion provides a large number of data connectors, allowing non-technical users to pull data from multiple data stores, combine, cleanse and push to a cloud-based database.

Matillion started as an engine to push data to Redshift and Snowflake, but has added endpoints in Azure, Big Query and most recently, Delta Lake in Databricks. Matillion is a cloud-based solution that runs in your cloud environment. It can be deployed on AWS, GCP and Azzure.

The key benefits of Matillion are that it is a cloud-based service that you can scale depending on your needs. It can pull data from both cloud and on-premise data sources. It can be used by less technical users, but does require cloud engineering and technical expertise to get the best out of it.

Price: starts at $15k per annum.

Panoply

Panoply is a tool designed to be used by anyone to pull data from cloud SAAS data APIs without any technical knowledge. It has its own inbuilt database (Redshift) which the data is pulled into. It is a very useful tool if you just want to pull the data into a datastore without having to provision your own database.

Panoply is essentially a very quick way to Extract and Load data. It does not provide any transformation functions, but does allow you to link this to a visualisation tool very quickly. Panoply can also be used in conjunction with other ETL tools, for example: Blendo and Stitch.

Pirce: starting at $449 per month

Informatica

Informatica is designed for moving data securely at scale between databases. It provides very sophisticated Transformation tools. It requires specialist users (often external consultancies) to configure and manage so it’s really targeted at enterprise-scale organisations, rather than start-ups and scale-ups.

Price: starts at $24k per annum

Talend

Talend is an open-source application that allows you to create sophisticated ETL procedures using its range of in-built connectors. Talend is more complex tool requiring technical expertise to connect.

Price: starts at $12k per annum

Fivetran

Fivetran is a simple cloud-based ELT engine. It has some very clever tooling which will autogenerate schemas optimised for reporting engines.

Fivetran has a very comprehensive list of supported connectors which can be used as both sources and destinations. This combines SAAS cloud applications, Cloud-based data warehouses and traditional RDBMS.

Price: on request

Stitch

Stitch is an ETL product that is often used in conjunction with cloud data warehousing. It is designed to be very simple and easy to use. Often data pipelines are created by non-technical users using Stitch. It is particularly useful when connecting to Cloud SAAS data API’s.

Stitch has an integration to allow you to push data directly into a Panoply data store.

Price: starting at $100 per month

Segment

Segment is a cloud-based solution that allows you to stream data into cloud-based data warehouses.

Segment is built to be event-based and allows data to be replicated into a data store and then allow connections to a visualisation tool. Segment is built to predominantly push data to Snowflake as a cloud data warehouse solution.

Price: starting at $100 per month

Blendo

Blendo is an ETL product that is often used in conjunction with cloud data warehousing. It is designed to be very simple and easy to use. Blendo has an integration to allow you to push data directly into a Panoply data store.

Price: starting at $120 per month

Summary: what are the best ELT / ELT tools for you?

Which ELT tools are best for your organisation will depend to some extent on the size of your business, your budget and your technical resource.

But ultimately these ELT tools have made it easier for businesses of any size to combine data from multiple sources and prepare it for analysis within your favourite Business Intelligence tools.

As the move to hosted cloud applications increases so does the need to pull the data into a simple structure. These tasks used to sit with highly skilled technicians. But the tools above have enabled business users to take control (and control costs) of data extraction, enabling you to utilise your data for valuable BI insights, faster, cheaper and more easily than ever before.